A website copy with HTTrack

Extreme World November 2002

project name: extremeworld

Web (URL) address: www.extremeworld.com

amount of time: 1 hour (56k modem)

in the scan rules, add:

-*.rm (to skip RealPlayer movies)

Read the page about Web Spider Traps,

then, in the Spider tab, select

no robots.txt rules
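The settings above are chosen in the HTTrack graphical interface. As a rough sketch only, the same choices could be expressed for the command-line `httrack` program as shown below. The helper name `build_httrack_command`, the `http://` prefix, and the output folder name are assumptions made for illustration; `-O` (output path), `-s0` (never follow robots.txt) and `--continue` (resume an interrupted mirror) are standard `httrack` options, but check `httrack --help` for your version before relying on them.

```python
import shlex


def build_httrack_command(url, output_dir, exclude_patterns=(),
                          ignore_robots=False, resume=False):
    """Build an httrack argument list (hypothetical helper, not part of HTTrack)."""
    cmd = ["httrack", url, "-O", output_dir]
    # A scan rule starting with "-" excludes matching links, e.g. "-*.rm".
    cmd += ["-" + pattern for pattern in exclude_patterns]
    if ignore_robots:
        # -s0 corresponds to "no robots.txt rules" in the Spider tab.
        cmd.append("-s0")
    if resume:
        # --continue resumes an interrupted mirror using the cache.
        cmd.append("--continue")
    return cmd


# Assumed values: the URL scheme and folder name are not given on this page.
command = build_httrack_command(
    "http://www.extremeworld.com/",
    "extremeworld",
    exclude_patterns=["*.rm"],
    ignore_robots=True,
)
print(shlex.join(command))  # shell-quoted form, ready to paste into a terminal
```

The function only builds the argument list; passing it to `subprocess.run(command)` would start the capture, assuming `httrack` is installed and on the PATH.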

problems:

- Missing images and videos because of robots.txt rules

- JavaScript image gallery

Other examples with similar difficulties: Alton Towers | Herberton | Martin Luther King 2004 | Martin Luther King 2002 | Recycling | Canobie

solutions:

- If the option "no robots.txt rules" has not been selected, most images and video files are missing at the end of the capture.

Select "no robots.txt rules", then choose "continue interrupted download" and connect to finish the capture.

- The image galleries only show the first photograph. A JavaScript script controls the manual slideshow.

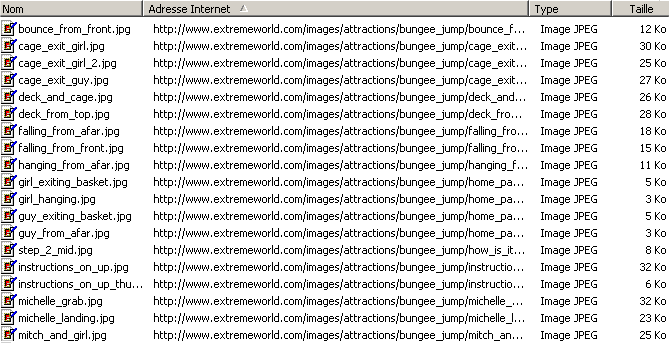

Visit the site online and load all the images by viewing every image gallery.

In the browser cache (Temporary Internet Files) you should find:

Copy all the images of all the slideshows (here the bungee jump gallery) into the capture folders, then delete "[1]" from the file names.
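Copying and renaming by hand becomes tedious when there are many slideshows. The following is a minimal sketch of that step, assuming the cached copies carry a "[1]" marker just before the file extension (for example `bungee01[1].jpg`). The function name, the folder arguments, and the list of image extensions are illustrative assumptions, not part of HTTrack.

```python
import shutil
from pathlib import Path

# Assumed set of image types; extend it if the galleries use other formats.
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".gif", ".png"}


def copy_cached_images(cache_dir, mirror_dir, marker="[1]"):
    """Copy images from cache_dir to mirror_dir, dropping the cache marker.

    Returns the list of file names written to mirror_dir.
    """
    cache_dir, mirror_dir = Path(cache_dir), Path(mirror_dir)
    mirror_dir.mkdir(parents=True, exist_ok=True)
    copied = []
    for src in sorted(cache_dir.iterdir()):
        if not src.is_file() or src.suffix.lower() not in IMAGE_EXTENSIONS:
            continue  # skip folders and non-image files
        stem = src.stem
        if stem.endswith(marker):
            stem = stem[:-len(marker)]  # "bungee01[1]" becomes "bungee01"
        dest = mirror_dir / (stem + src.suffix)
        shutil.copy2(src, dest)  # copy2 also keeps the file timestamps
        copied.append(dest.name)
    return copied
```

Call it once per gallery, with the cache folder as the first argument and the matching image folder of the mirror as the second. Existing files with the same name in the mirror folder are overwritten, so try it on a copy of the capture first.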

Now, you can browse the mirror offline.
